Top 5 Cyber Security Trends for 2026

Introduction: The Cyber Security Paradigm Shift

The world of cyber security is on the cusp of a fundamental transformation. For decades, the primary security model has been one of prevention: building higher walls and stronger gates to keep adversaries out. But as we look toward 2026, this fortress mentality is proving insufficient. The digital landscape is shifting under our feet, driven by the rapid growth of artificial intelligence, which attackers are weaponising to create threats that are faster, smarter, and more elusive than ever before.

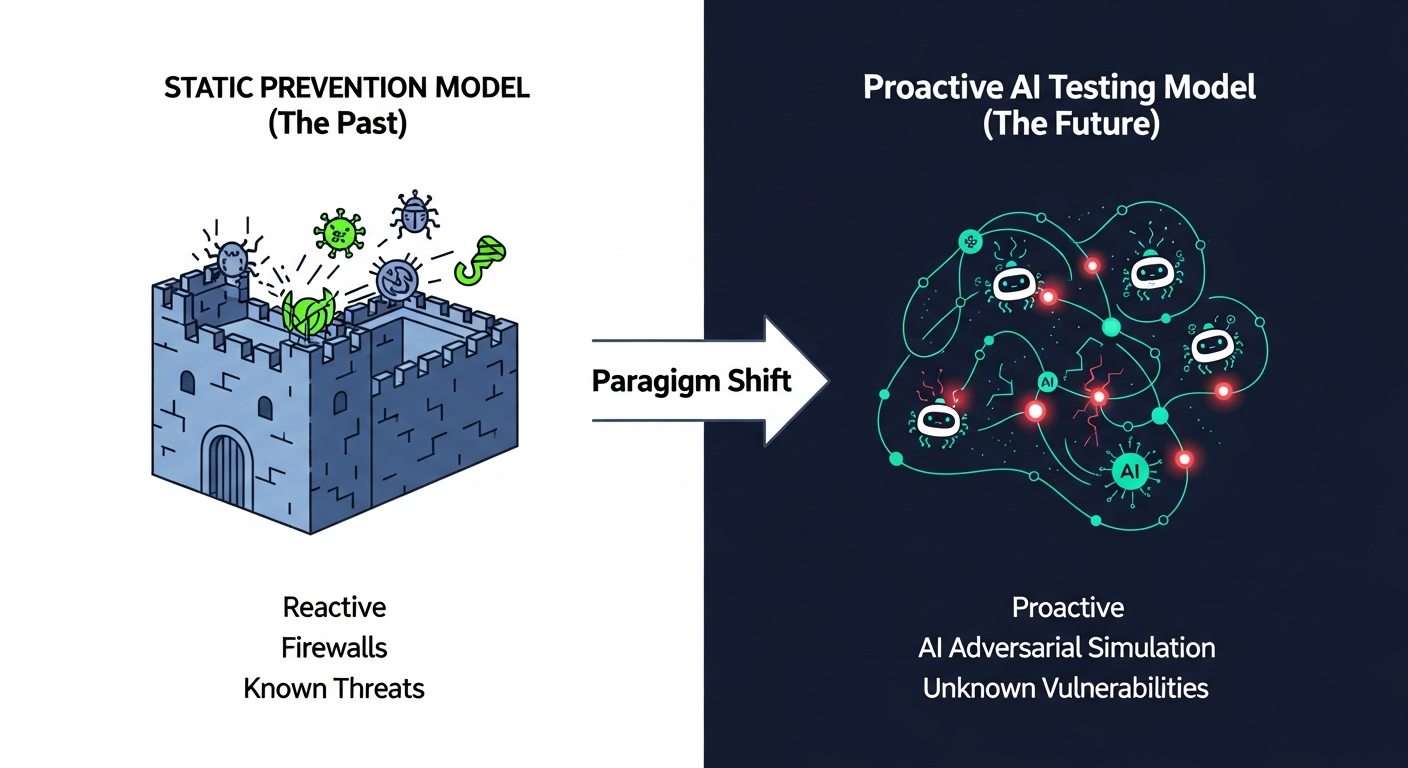

The Imperative: Shifting from Static Prevention to Dynamic AI Testing

The fundamental shift in cyber security: from a passive, fortress-like prevention model to a proactive, adaptive model powered by continuous AI testing.

In this new environment, waiting for an attack to happen is a losing strategy. The paradigm must shift from a passive, preventative posture to a proactive, adaptive one. The future of robust cyber security lies not just in defending against known threats, but in actively and continuously testing defenses against the unknown. This is where AI testing emerges as a critical discipline: using artificial intelligence to simulate adversarial tactics, discover hidden vulnerabilities, and validate the resilience of systems before they are exploited.

What to Expect: A Glimpse into the Top 5 Trends

This article explores the top five cyber security trends for 2026, all revolving around this pivotal shift from prevention to proactive AI testing. We will examine why traditional defenses are falling short and how AI-powered adversarial simulation, predictive threat intelligence, autonomous vulnerability management, deepfake countermeasures, and accelerated incident response are reshaping the security model for the modern digital infrastructure.

The Evolving Threat Landscape in 2026: Why Traditional Prevention Falls Short

The core tools of traditional cyber security (firewalls, antivirus software, and intrusion detection systems) were designed for a different era. While they remain essential components of a layered defense, their effectiveness is waning against a new generation of intelligent and automated threats. The fundamental challenge is that these preventative tools are primarily reactive, built to stop attacks that conform to known patterns.

AI-Powered Cyber Attacks and Threats

By 2026, the use of AI by malicious actors will be commonplace. Generative AI is already creating phishing emails and social engineering campaigns with unprecedented realism, bypassing human skepticism and technical filters alike. AI models can analyse a target’s network architecture to identify the most subtle vulnerabilities and craft custom exploits in real time. These AI-driven threats don't rely on a static signature; they adapt their behavior to evade detection, making them nearly invisible to conventional security tools until it's too late. An attack that once took weeks of manual effort can now be executed in minutes, overwhelming security teams.

Understanding the Shift: From Prevention to Proactive AI Testing

The move from prevention to AI testing is not about abandoning existing defenses but augmenting them with a new layer of intelligent verification. It represents a strategic acknowledgment that no defense is perfect and that resilience is best achieved through rigorous, continuous, and intelligent testing.

As we move toward 2026, five key trends underscore the critical importance of integrating AI testing into every facet of a modern cyber security strategy.

Trend 1: AI-Powered Adversarial Testing and Automated Red Teaming

Manual red teaming, where ethical hackers simulate an attack, is time-consuming and limited in scope. The first major trend is the automation of this process using AI. AI-powered platforms can now conduct persistent, 24/7 adversarial testing, continuously launching sophisticated but safe attacks against an organisation’s systems. These AI red teams can adapt their tactics based on the defenses they encounter, discovering novel exploit chains and providing a dynamic measure of security effectiveness over time. This allows a business to test its entire attack surface, from cloud infrastructure to employee access points, far more comprehensively than periodic manual exercises allow.

Trend 2: Predictive AI for Cyber Threat Intelligence and Behavioral Intelligence

The second trend moves beyond reactive threat intelligence to predictive analytics. AI models can now analyze vast datasets from global threat feeds, dark web forums, and internal network traffic to identify emerging threats and predict future attack vectors. This predictive intelligence is then used to inform AI testing strategies. For example, if the AI predicts a new phishing technique is likely to target the financial services sector, it can automatically generate and run simulations of that specific attack against the organisation to test its resilience. This transforms threat intelligence from a passive information service into an active, forward-looking defense mechanism.

Trend 3: Autonomous AI for Vulnerability Management and Proactive Remediation

Traditional vulnerability management involves periodic scans followed by a lengthy manual process of prioritization and patching. The third trend is the rise of autonomous systems that use AI to handle this entire lifecycle. AI tools will continuously scan for, discover, and validate vulnerabilities. More importantly, they will use testing to prioritize remediation based on the actual risk an exploit poses to critical business systems. In some cases, these AI systems can even test and deploy patches automatically, drastically reducing the window of opportunity for attackers and freeing up security professionals to focus on more strategic initiatives.
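The idea of prioritising remediation by actual risk can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `Finding` fields, the 0 to 10 severity scale, the 1 to 5 criticality scale, and the rule that a validated exploit doubles the score. A real platform would use its own scoring model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """A hypothetical vulnerability finding produced by a scanner."""
    identifier: str
    severity: float          # base severity on a 0-10 scale (assumed)
    exploit_validated: bool  # True if a safe test actually exploited it
    asset_criticality: int   # 1 (low) to 5 (business critical), assumed scale


def risk_score(finding: Finding) -> float:
    """Combine severity, test result, and asset criticality into one number."""
    # Illustrative weighting only: a validated exploit doubles the score.
    validation_factor = 2.0 if finding.exploit_validated else 1.0
    return finding.severity * finding.asset_criticality * validation_factor


def prioritise(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from highest to lowest risk score."""
    return sorted(findings, key=risk_score, reverse=True)


findings = [
    Finding("VULN-A", severity=9.8, exploit_validated=False, asset_criticality=1),
    Finding("VULN-B", severity=6.5, exploit_validated=True, asset_criticality=5),
    Finding("VULN-C", severity=7.2, exploit_validated=False, asset_criticality=3),
]
ranked = prioritise(findings)
print([f.identifier for f in ranked])
```

In this toy data set, the finding with the highest raw severity ends up last because it sits on a low-criticality asset and was not exploitable in testing, which is the kind of reordering that test-driven prioritisation is meant to produce.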

Trend 4: Deepfake and Generative AI Countermeasures via AI Testing

Generative AI has enabled the creation of highly convincing deepfake videos, voice clones, and phishing communications, posing a significant threat to business security. The fourth trend involves fighting fire with fire: using AI testing to build resilience against these attacks. Security platforms will leverage generative AI to create realistic deepfake simulations for employee training, teaching them to spot subtle artifacts that betray a synthetic origin. Furthermore, AI testing can be used to validate the effectiveness of technical countermeasures designed to detect AI-generated content within email and communications systems.

Trend 5: AI-Accelerated Incident Response and Forensic Analysis

When a security incident occurs, time is the most critical factor. The fifth trend is the application of AI to accelerate every stage of incident response. This begins with AI-driven simulations that test and refine an organisation's response playbooks against various attack scenarios. During a real incident, AI tools can perform forensic analysis at machine speed, sifting through terabytes of log data in seconds to pinpoint the breach's origin, identify compromised systems, and map the attacker's movements. This dramatically shortens the time from detection to containment, minimizing the damage and cost of a breach.
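To make the forensic step more concrete, the sketch below reconstructs an attacker's path from login records. It is a plain rule-based stand-in for the correlation that AI tooling would perform at far larger scale, not a description of any particular product. The log format, host names, and the `trace_movement` helper are all hypothetical.

```python
from datetime import datetime

# Hypothetical, simplified records: timestamp, source host, destination host, event.
LOG_LINES = [
    "2026-01-10T09:00:05 workstation-7 fileserver-1 login_success",
    "2026-01-10T08:58:41 external-ip workstation-7 login_success",
    "2026-01-10T09:03:12 fileserver-1 db-2 login_success",
    "2026-01-10T09:01:00 workstation-3 fileserver-1 login_failure",
]


def parse(line):
    """Split one log line into (timestamp, source, destination, event)."""
    ts, src, dst, event = line.split()
    return datetime.fromisoformat(ts), src, dst, event


def trace_movement(lines, entry_source):
    """Follow successful logins outward from entry_source in time order."""
    events = sorted(parse(line) for line in lines)
    compromised = {entry_source}
    path = []
    for ts, src, dst, event in events:
        # A successful login from an already-compromised host spreads the compromise.
        if event == "login_success" and src in compromised and dst not in compromised:
            compromised.add(dst)
            path.append((ts.isoformat(), src, dst))
    return path


path = trace_movement(LOG_LINES, "external-ip")
for timestamp, src, dst in path:
    print(timestamp, src, "->", dst)
```

Sorting by timestamp before walking the events matters: the records arrive out of order, and the chain from the entry point to the database host only emerges once they are replayed chronologically.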

Implementing AI Testing: Practical Steps for Organisations

Adopting an AI testing framework requires a strategic and phased approach. It involves more than just purchasing new tools; it necessitates a cultural shift toward continuous validation and a proactive security mindset.

Strategic Planning and Cyber Security Policies

Organisations must begin by integrating AI testing into their overarching cyber security strategy. This involves updating security policies to mandate continuous testing of new applications, cloud services, and infrastructure changes. Leadership must define clear objectives for the AI testing program, such as reducing the time to detect critical vulnerabilities or validating incident response plans quarterly.

Technology Adoption and Integration

The next step is to select and implement the right AI testing tools. This requires evaluating platforms that can integrate seamlessly with existing security systems, such as SIEM and SOAR solutions. The goal is to create a cohesive ecosystem where the intelligence gathered from AI testing automatically informs defensive configurations and triggers automated remediation workflows, creating a closed-loop security model.

Skill Development for Security Professionals

The role of the security professional is evolving. Instead of manually searching for threats, they will supervise AI testing systems, interpret the results, and focus on strategic risk management. Organisations must invest in training programs to upskill their teams in areas like data science, AI model management, and threat simulation analysis to effectively manage these powerful new tools.

Challenges and Ethical Considerations in AI Testing

While powerful, the adoption of AI testing is not without its challenges and ethical responsibilities. Navigating these issues is crucial for successful and responsible implementation.

Bias and Accuracy of AI Models

AI models are only as good as the data they are trained on. If the training data is biased or incomplete, the AI testing model may overlook certain types of vulnerabilities or focus too heavily on others, creating blind spots in an organisation's security posture. Continuous monitoring and retraining of AI models are essential to ensure their accuracy and effectiveness.

Data Privacy and Compliance

AI testing platforms require access to sensitive system data to function effectively. Organisations must ensure that this access is governed by strict privacy controls and complies with regulations like GDPR and CCPA. Data used for testing should be anonymized where possible, and robust access controls must be in place to prevent the testing tools themselves from becoming a security risk.
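One common way to reduce exposure of identifiers in test data is keyed hashing, sketched below with Python's standard `hmac` and `hashlib` modules. The field names, the sample record, and the 16-character truncation are assumptions for illustration. Note that this is pseudonymisation rather than full anonymisation: anyone holding the key can link tokens back to known values, so the key itself needs strict access control.

```python
import hashlib
import hmac


def pseudonymise(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest, truncated for readability."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def scrub_record(record: dict, sensitive_fields: set, secret_key: bytes) -> dict:
    """Return a copy of record with the sensitive fields replaced by tokens."""
    return {
        key: pseudonymise(str(val), secret_key) if key in sensitive_fields else val
        for key, val in record.items()
    }


# Hypothetical key and record; in practice the key would come from a secrets store.
key = b"example-key-for-illustration-only"
record = {"user": "alice@example.com", "src_ip": "10.0.0.12", "event": "login_failure"}
scrubbed = scrub_record(record, {"user", "src_ip"}, key)
print(scrubbed)
```

Because the same input always maps to the same token under a given key, the testing tool can still correlate events belonging to one user or host without seeing the underlying identifier.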

Ethical Deployment and Responsibility

The same AI tools used for defensive testing could, in the wrong hands, be used for malicious purposes. Organisations have an ethical responsibility to secure their AI testing platforms and ensure they are used only for their intended purpose. This includes establishing clear rules of engagement for all testing activities and maintaining a transparent audit trail to prevent misuse.
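An audit trail is more useful when tampering with it is detectable. One possible approach, sketched here under simplifying assumptions, is to chain entries together by hash so that editing any earlier entry invalidates everything after it. The entry fields and the `append_entry` and `verify` helpers are hypothetical, and the sketch keeps the log in memory only.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _hash_body(body: dict) -> str:
    """Hash an entry body using a stable JSON encoding."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list, actor: str, action: str) -> None:
    """Append an entry whose hash covers its content and the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = {"actor": actor, "action": action, "prev_hash": prev_hash}
    log.append({**body, "hash": _hash_body(body)})


def verify(log: list) -> bool:
    """Recompute every hash and check that the chain is unbroken."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: entry[k] for k in ("actor", "action", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _hash_body(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "red-team-bot", "started phishing simulation")
append_entry(audit_log, "analyst-1", "approved scope change")
print(verify(audit_log))

audit_log[0]["action"] = "nothing to see here"  # simulate tampering
print(verify(audit_log))
```

A chain like this only shows that entries were altered after the fact. It does not stop someone with write access from rebuilding the whole log, so in practice the latest hash would also need to be stored somewhere the testing platform cannot modify.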

Conclusion: Securing the Future with Intelligent Defenses

The cyber security landscape of 2026 will be defined by the escalating intelligence of both attackers and defenders. The traditional, static model of prevention is no longer sufficient to protect a modern business from sophisticated, AI-driven threats. The future belongs to organisations that embrace a proactive, adaptive, and intelligent approach to security.

A Call to Action for Proactive Security

The time to prepare for this shift is now. Business leaders and security professionals must work together to build a culture of continuous validation. This means investing in AI-powered tools, upskilling security teams, and integrating proactive testing into every stage of the development and operations lifecycle. By embracing AI testing, organisations can not only defend against the threats of today but also build a resilient and secure foundation for the challenges of tomorrow.
