Phishing & Social Engineering Demystified: A Comprehensive Guide to Spotting Deceit

For years, phishing and social engineering have been the primary weapons of cybercriminals. Now, with the dawn of the AI era, these threats have evolved, becoming more personal, persuasive, and perilous than ever before. Understanding this new landscape isn't just an IT issue: it's a critical life skill for every individual and a strategic imperative for every organisation. This guide will demystify these AI-enhanced threats, providing the knowledge needed to spot deceit and build a resilient defence.

The Foundation of Deceit: Phishing and Social Engineering Explained

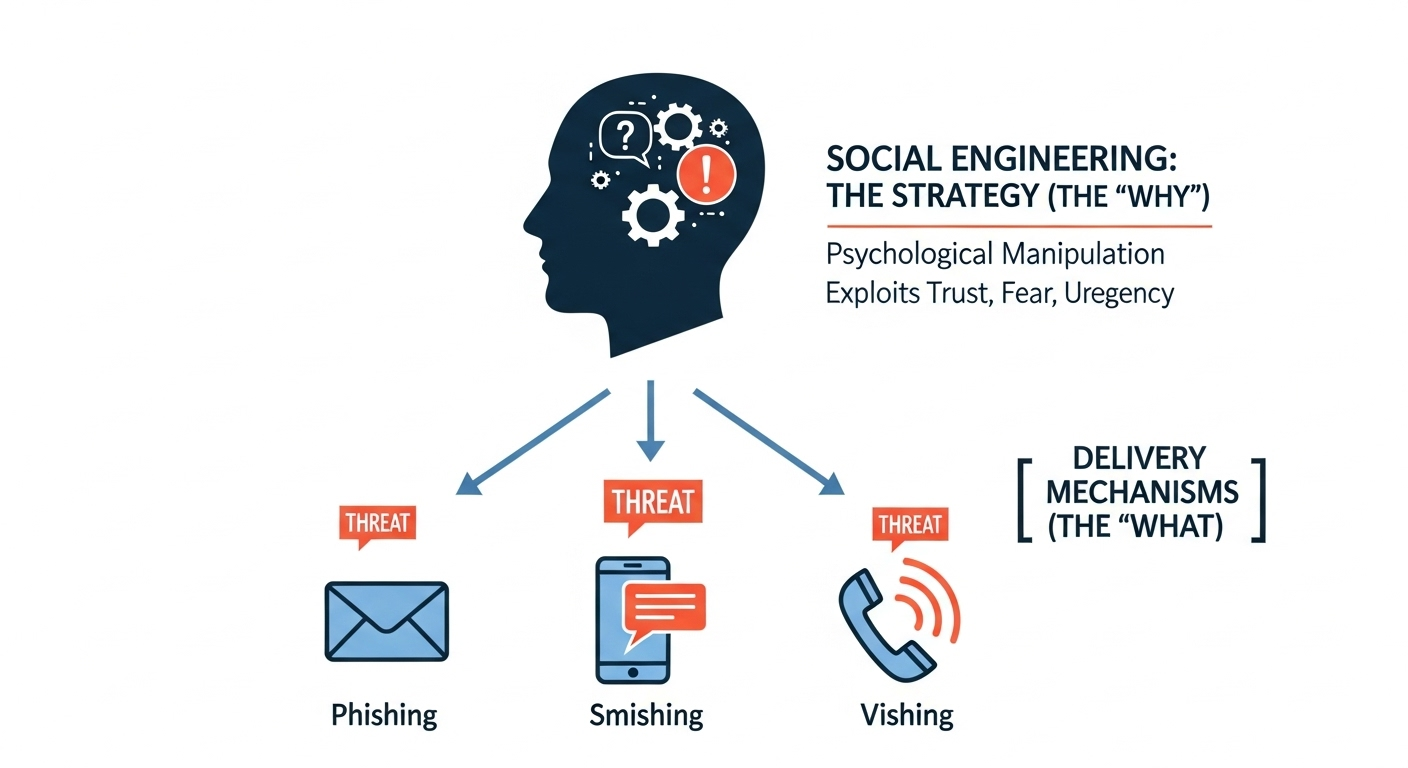

Social engineering is the overarching strategy of psychological manipulation, while phishing and its variants, such as smishing and vishing, are the specific delivery mechanisms used to execute the attack.

At its core, every cyber attack that relies on deception is built upon two interconnected concepts: social engineering and phishing. Understanding their distinct roles is the first step toward effective cyber security.

Social engineering is the art of psychological manipulation. It is the master strategy behind the attack, targeting human emotions and cognitive biases such as trust, fear, urgency, or curiosity to trick a victim into divulging sensitive information or performing an action they shouldn't. Attackers who use social engineering are exploiting the fact that humans can be the weakest link in any security chain. They don't hack systems; they hack people. Their goal is to persuade an individual to willingly open a door that technology has locked.

Phishing, on the other hand, is the primary delivery mechanism for a social engineering attack. While social engineering is the "why" and the "how" of the manipulation, phishing is the "what": the tangible vehicle for the deceit. The most common form is the phishing email, a fraudulent message designed to appear legitimate. However, the attack can also come via text messages (smishing), voice calls (vishing), or direct messages on social media.

A classic phishing campaign might impersonate a bank, a popular online service, or even a colleague, using a carefully crafted narrative to provoke a desired response. The ultimate objective is typically to steal credentials (usernames and passwords) or financial information, or to install malicious software (malware) on the victim’s device and so gain access to their systems. Together, these two elements create a potent threat, leveraging technological tools to execute a fundamentally human-centric attack.

How Machine Learning Amplifies Attacks

The principles of social engineering have remained constant, but the tools used to execute these attacks have undergone a radical transformation. The integration of Artificial Intelligence (AI) and Machine Learning has armed attackers with capabilities that represent a qualitative leap in deception, making their schemes significantly more effective and harder to detect.

Previously, many phishing emails could be identified by tell-tale signs: poor grammar, awkward phrasing, or generic greetings. AI-powered language models have all but eliminated these red flags. These tools can now generate perfectly fluent, contextually appropriate, and highly persuasive content in seconds. They can mimic specific writing styles, adopt professional tones, and craft narratives that are virtually indistinguishable from legitimate communications. For instance, security researchers have demonstrated the ability to use a single prompt in a tool like ChatGPT to generate a complete, functional password-reset phishing email and a corresponding fraudulent landing page in under a minute.

Furthermore, Machine Learning algorithms excel at reconnaissance and personalisation at an unprecedented scale. Attackers can deploy AI tools to scrape the internet and social media for information about their targets: an individual's job title, professional connections, recent activities, or personal interests. This data is then used to craft "spear-phishing" emails, which are highly tailored attacks aimed at a specific person or organisation.

An AI-generated email might reference a recent project, a shared connection on LinkedIn, or an upcoming industry event to build credibility and lower the victim's guard. This level of personalisation makes the fraudulent request seem far more plausible, dramatically increasing the likelihood of a successful attack. The result is a new breed of threats that are not only grammatically perfect but also psychologically resonant, turning a generic trap into a personalised ambush.

Spotting Deceit in AI: A New Skillset for Individuals

As AI erodes the traditional indicators of phishing, the responsibility of detection shifts from spotting technical flaws to developing a more nuanced sense of digital skepticism. Individuals must cultivate a new skillset focused on context, verification, and emotional awareness to identify sophisticated social engineering attempts.

The first principle is to question the unexpected and the urgent. AI-powered attacks are designed to trigger an immediate emotional response—fear of an account being locked, excitement about a prize, or pressure from a supposed superior. Before clicking any link or downloading an attachment, take a moment to pause and think critically. Ask yourself: Was I expecting this email? Does this request make sense in the context of my relationship with the sender? Legitimate organisations rarely use high-pressure tactics to request sensitive credentials or demand immediate financial action via email.

Next, adopt a policy of "trust but verify" through a separate channel. If you receive an email from your bank, a service provider, or even a colleague asking for information or directing you to a login page, do not use the links or contact details provided in the message. Instead, navigate to the official website by typing the address directly into your browser or use a bookmarked link. For internal requests within an organisation, call the person on a known phone number or contact them via a different platform like an internal chat tool to confirm the request is legitimate. This simple act of independent verification is one of the most powerful defences against impersonation.

Finally, scrutinise the technical details that AI cannot fully control. While the email content may be flawless, the attacker still needs to manipulate the underlying infrastructure.

- Inspect the Sender’s Email Address: Attackers often use domains that are subtly different from the real one (e.g., microsft.com instead of microsoft.com). Look for small misspellings or unexpected domain extensions.

- Hover Over Hyperlinks: Before clicking, move your mouse cursor over any links to reveal the true destination URL. If the URL displayed doesn't match the legitimate domain of the purported sender, it is a major red flag.

- Be Wary of Attachments: Unsolicited attachments, especially those with generic names or unusual file types (.html, .zip), can contain malware. Do not open them unless you have verified the sender and the context.
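The first two checks in the list above can also be automated, at least roughly. The following is a minimal Python sketch, not a production filter: `TRUSTED_DOMAINS`, `lookalike_of`, and `mismatched_links` are hypothetical names invented for illustration, and the edit-distance threshold of 2 is an assumption rather than a recommended value.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# Hypothetical allow-list for illustration; a real one would be maintained locally.
TRUSTED_DOMAINS = {"microsoft.com", "example-bank.com"}


def edit_distance(a, b):
    """Levenshtein distance between two strings (row-by-row dynamic programming)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def lookalike_of(sender, max_distance=2):
    """Return the trusted domain that the sender's domain imitates, or None."""
    domain = sender.rsplit("@", 1)[-1].lower()
    if domain in TRUSTED_DOMAINS:
        return None  # exact match: not a lookalike
    for trusted in TRUSTED_DOMAINS:
        if edit_distance(domain, trusted) <= max_distance:
            return trusted
    return None


class LinkCollector(HTMLParser):
    """Collect (href, visible text) pairs for every <a> element with an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def shown_host(text):
    """Host named by the visible link text, or '' if the text is not URL-like."""
    if not text or any(ch.isspace() for ch in text):
        return ""
    parsed = urlparse(text if "://" in text else "//" + text)
    host = (parsed.hostname or "").lower()
    return host if "." in host else ""


def mismatched_links(html):
    """Return (text, href) pairs where the text shows one host but the href targets another."""
    parser = LinkCollector()
    parser.feed(html)
    flagged = []
    for href, text in parser.links:
        target = (urlparse(href).hostname or "").lower()
        shown = shown_host(text)
        if shown and shown != target:
            flagged.append((text, href))
    return flagged
```

With this allow-list, `lookalike_of("support@microsft.com")` returns `"microsoft.com"`, and a link whose visible text reads `https://microsoft.com` but whose `href` points at another host is flagged by `mismatched_links`. The host comparison is deliberately strict (for example, `www.microsoft.com` and `microsoft.com` count as different), so a real tool would need to normalise hosts before comparing them.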

Cultivating these habits (pausing to think, verifying independently, and inspecting the technical details) creates a multi-layered personal defence that is far more resilient to the sophisticated deceptions of the AI era.

Protecting Against AI-Enhanced Cybercrime

While individual vigilance is the first line of defence, a robust cyber security posture requires a comprehensive, organisation-wide strategy. Protecting an entire organisation from AI-enhanced social engineering threats involves a blend of advanced technology, resilient processes, and, most importantly, a deeply ingrained security-aware culture.

Technological defences are the foundational layer. Modern email security gateways now employ their own Machine Learning algorithms to analyse incoming messages for signs of phishing. These tools can assess a vast array of signals, including sender reputation, link authenticity, and language patterns indicative of social engineering, to quarantine threats before they reach an employee's inbox.
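To make the idea of combining signals concrete, here is a deliberately simplified Python sketch. It is a hand-weighted, rule-based score, not a trained Machine Learning model and not how any particular gateway works; the `Message` fields, the urgency patterns, the weights, and the 0.6 threshold are all assumptions chosen for illustration.

```python
import re
from dataclasses import dataclass

# Hypothetical urgency phrases; a real system would learn such patterns from data.
URGENCY_PATTERNS = [
    r"\burgent(ly)?\b",
    r"\bimmediately\b",
    r"\baccount (has been |will be )?(locked|suspended)\b",
    r"\bverify your (account|password)\b",
]


@dataclass
class Message:
    sender_known: bool        # assumed signal: sender has prior history with the recipient
    links_match_sender: bool  # assumed signal: every link points at the sender's own domain
    body: str


def phishing_score(msg):
    """Combine three signals into a score between 0.0 and 1.0 (illustrative weights)."""
    score = 0.0
    if not msg.sender_known:
        score += 0.3
    if not msg.links_match_sender:
        score += 0.4
    # Count how many distinct urgency patterns appear, capped at three.
    hits = sum(bool(re.search(p, msg.body, re.IGNORECASE)) for p in URGENCY_PATTERNS)
    score += min(hits, 3) * 0.1
    return round(score, 2)


def should_quarantine(msg, threshold=0.6):
    """Quarantine when the combined score reaches the (assumed) threshold."""
    return phishing_score(msg) >= threshold
```

A message from an unknown sender, with mismatched links and several urgency phrases, reaches the maximum score and is quarantined, while a routine message from a known sender scores zero. Production tools weigh many more signals and tune them against real traffic.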

However, technology alone is insufficient. Resilient processes must be established to thwart attacks that bypass technical filters. A key area of vulnerability is financial transactions and data requests. Organisations should implement and enforce strict multi-person verification protocols for any request involving wire transfers, changes to payment details, or access to sensitive systems. For example, a request to change a vendor's bank account information received via email must be confirmed through a phone call to a pre-established contact number for that vendor. This creates a human firewall that disrupts the attacker’s plan even if the initial phishing email was convincing.
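The verification protocol described above can be expressed as a small state check. The Python sketch below is one possible shape under stated assumptions: the `PaymentChangeRequest` class, its field names, and the requirement of two approvers are hypothetical, not taken from any specific finance system.

```python
from dataclasses import dataclass, field


@dataclass
class PaymentChangeRequest:
    vendor: str
    requested_by: str
    new_account: str
    callback_confirmed: bool = False
    approvers: set = field(default_factory=set)

    def confirm_callback(self, number_dialled, number_on_file):
        """Record the callback only if it went to the pre-established number,
        never to a number supplied in the email itself."""
        self.callback_confirmed = number_dialled == number_on_file
        return self.callback_confirmed

    def approve(self, employee):
        """Add an approver; the requester may not approve their own request."""
        if employee == self.requested_by:
            raise ValueError("requester cannot approve their own request")
        self.approvers.add(employee)

    def can_execute(self, required_approvals=2):
        """The change proceeds only with a confirmed callback and enough approvers."""
        return self.callback_confirmed and len(self.approvers) >= required_approvals
```

The point of the design is that no single person, and no single email, can complete the change: a convincing message can at most create the request, which then stalls until the out-of-band call and the independent approvals have taken place.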

Conclusion: The Future of Deception and Defence: An Ongoing Evolution

The emergence of AI-driven social engineering marks a significant escalation in the ongoing battle for cyber security. We are entering an era where the lines between authentic and fraudulent communication are becoming increasingly blurred. The threats will continue to evolve as attackers refine their AI tools to generate convincing deepfake videos for vishing, create highly autonomous conversational bots for social media attacks, and adapt their campaigns in real time based on a victim's responses.

In response, our defences must also evolve. The future of cyber security will be an arms race between malicious AI and defensive AI. Security tools will become more predictive, using machine learning to identify anomalous communication patterns and anticipate attacks before they launch. Individuals and organisations can no longer afford to be passive targets.

For individuals, this means treating digital skepticism as a core skill, constantly refining the ability to question and verify information encountered on the internet.

For organisations, it requires moving beyond static defences and investing in a dynamic security culture that promotes awareness, critical thinking, and proactive reporting.

By demystifying these advanced threats and fostering a proactive security mindset, we can build the resilience needed to protect our digital lives from the sophisticated deceit of the AI era.

Here at Pentest People, we offer phishing assessments and social engineering assessments to help organisations identify and mitigate the human vulnerabilities within their security posture. Our colleagues at WorkNest can support with all aspects of employee data protection, including breaches, ICO notifications, SARs, audits, and policy reviews.
